On-Device AI: Why Smarter, Private Apps Are the Next Wave

The move from cloud-only intelligence to on-device processing is reshaping how apps behave and how people expect technology to protect their data.

Running models locally (on phones, laptops, cameras, or sensors) delivers faster responses, greater privacy, and less dependence on the network. That combination is unlocking features that feel immediate and trustworthy.

Why on-device matters

– Latency: Local inference removes the network round trip to a remote server, so voice assistants, image recognition, and predictive typing can respond without waiting on the connection.

– Privacy: Raw data can stay on the device instead of flowing to centralized servers, which reduces exposure and makes it easier to meet stricter privacy regulations and user expectations.

– Offline reliability: Apps that work without connectivity are more resilient for users in transit or in low-coverage areas.

– Cost and scalability: Shifting inference to endpoints reduces cloud compute and bandwidth costs for service providers while distributing load across devices.

Hardware and software advances

Modern devices increasingly include dedicated neural processing units (NPUs) and GPUs tuned for machine learning workloads.

Software frameworks have evolved to match: TensorFlow Lite, ONNX Runtime, Core ML, and TinyML toolchains optimize models for constrained memory and power envelopes.

Techniques like quantization, pruning, and knowledge distillation shrink models, often with only a small loss in accuracy, while sparsity and model compilers help exploit hardware acceleration.
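To make quantization and pruning concrete, here is a minimal pure-Python sketch. It assumes symmetric per-tensor int8 quantization and unstructured magnitude pruning, which are one common variant of each, not a description of what any particular framework does internally. The function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor, which would otherwise give scale = 0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by magnitude, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    w = [0.82, -0.05, 0.31, -1.27, 0.002, 0.6, -0.44, 0.09]  # hypothetical layer weights
    q, scale = quantize_int8(w)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, restored))
    print("int8 codes:", q)
    print(f"scale={scale:.5f}  max abs error={max_err:.5f}")
    print("50% pruned:", magnitude_prune(w, 0.5))
```

Storing each weight as a one-byte code plus a single shared float scale cuts weight storage roughly 4x compared with float32, and the rounding error per weight is at most half the scale. Production toolchains typically add refinements such as per-channel scales and calibration data to keep accuracy loss small.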

Practical benefits you already notice

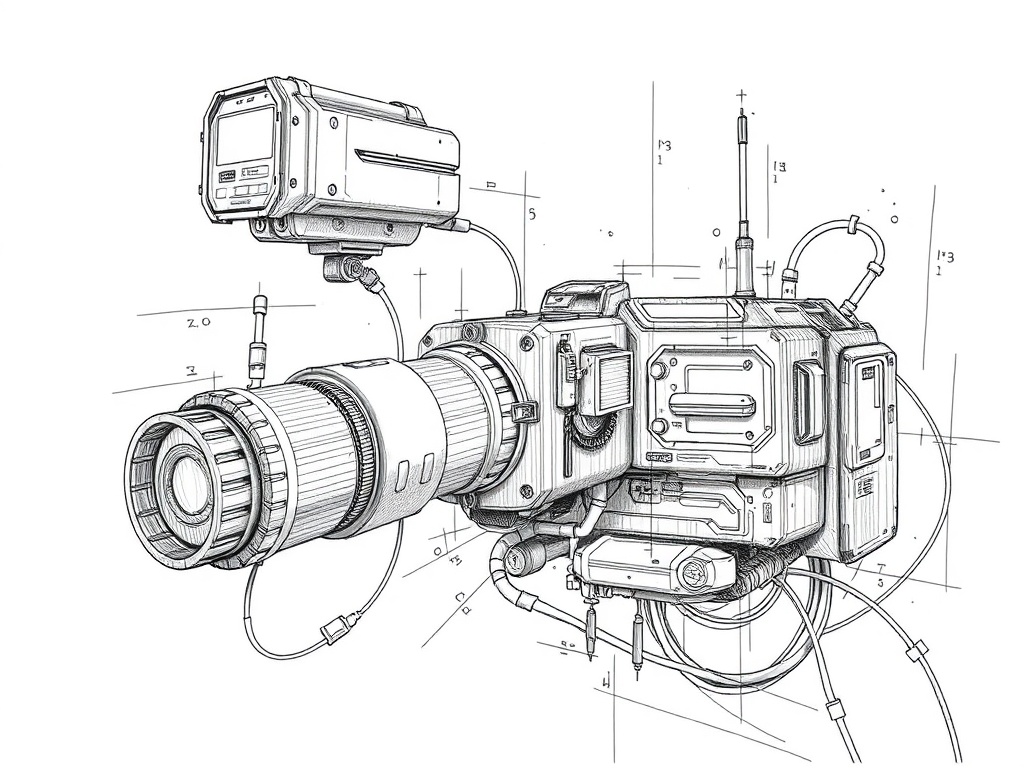

– Smarter camera modes that apply scene detection and portrait effects in real time

– Keyboard suggestions and autocorrect that adapt to your personal style while keeping keystrokes local

– Health and activity recognition that preserves sensitive sensor data on-device

– Faster wake-word detection that listens for commands without sending audio streams to the cloud

Design tradeoffs and challenges

Local models must balance accuracy against footprint and energy consumption, so developers need to measure inference latency, peak memory usage, and battery drain on each target device. Security matters too: a model shipped to a device can be extracted or tampered with, and techniques like model encryption and secure enclaves make that harder rather than impossible. Updating models also requires careful orchestration, whether through secure incremental downloads or hybrid strategies that offload heavy tasks to the cloud when appropriate.
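The measurement step can start with a small harness like the one below. It is a sketch assuming `infer` is any Python callable wrapping your model; `toy_infer` is a hypothetical stand-in. It reports median and approximate 95th-percentile latency, then runs a separate pass under `tracemalloc` so memory tracing does not skew the timings.

```python
import statistics
import time
import tracemalloc


def profile_inference(infer, sample_input, warmup=5, runs=50):
    """Time repeated calls to `infer`, then measure peak Python heap use in a separate pass."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):  # let caches and lazy initialization settle before timing
        infer(sample_input)

    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample_input)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    # Simple approximation of the 95th percentile from the sorted samples.
    p95 = latencies_ms[min(len(latencies_ms) - 1, int(0.95 * len(latencies_ms)))]

    # Memory pass kept separate so tracing overhead does not inflate the timings above.
    tracemalloc.start()
    try:
        infer(sample_input)
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()

    return {
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": p95,
        "peak_py_heap_kib": peak_bytes / 1024.0,
    }


def toy_infer(features):
    """Hypothetical stand-in for a real model call."""
    hidden = [max(0.0, f * 0.5 - 0.1) for f in features]
    return sum(hidden)


if __name__ == "__main__":
    stats = profile_inference(toy_infer, [0.1 * i for i in range(10_000)])
    print({k: round(v, 3) for k, v in stats.items()})
```

`tracemalloc` only sees Python-level allocations, so native runtime buffers, thermal throttling, and battery drain still need on-device tools such as the Android Studio profilers or Xcode Instruments.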

Best practices for builders

– Start with a clear partition: keep sensitive preprocessing and inference local, and reserve the cloud for heavy training, cross-user personalization, or complex tasks that exceed device capability.

– Apply quantization and pruning early in the pipeline so accuracy/performance tradeoffs surface before deployment rather than after.

– Profile on real hardware, not just emulators, to reveal thermal throttling and battery impact.

– Implement secure model delivery and versioning to defend intellectual property and support rollbacks.

– Design for graceful degradation: offer limited functionality when offline or when using lower-capacity devices.
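Secure delivery with rollback can be sketched as a small registry. This assumes the app receives each model's expected SHA-256 digest from a manifest that is itself authenticated (for example, signed by the publisher); `ModelRegistry` and its methods are hypothetical, not a platform API.

```python
import hashlib


class ModelRegistry:
    """Hypothetical on-device store that verifies, activates, and rolls back model versions."""

    def __init__(self):
        self._blobs = {}    # version -> verified model bytes
        self._history = []  # activation order; the last entry is the active version

    @property
    def active_version(self):
        return self._history[-1] if self._history else None

    def install(self, version, blob, expected_sha256):
        """Activate `blob` only if its SHA-256 matches the (assumed authenticated) manifest."""
        digest = hashlib.sha256(blob).hexdigest()
        if digest != expected_sha256.lower():
            raise ValueError(f"digest mismatch for model {version}; keeping {self.active_version}")
        self._blobs[version] = blob
        self._history.append(version)

    def rollback(self):
        """Deactivate the current version and return to the previous one."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self.active_version

    def load_active(self):
        if self.active_version is None:
            raise RuntimeError("no model installed")
        return self._blobs[self.active_version]


if __name__ == "__main__":
    registry = ModelRegistry()
    # In practice the expected digest comes from the downloaded manifest, not the blob itself.
    for version, blob in [("1.0", b"weights-v1"), ("1.1", b"weights-v2")]:
        registry.install(version, blob, hashlib.sha256(blob).hexdigest())
    print("active:", registry.active_version)
    print("after rollback:", registry.rollback())
```

A digest only proves the bytes match the manifest; the real guarantee comes from trusting the manifest, which is why production systems usually verify a signature on it before installing anything.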

What consumers should look for

– Check app privacy labels and settings for on-device processing options.

– Prefer devices with dedicated NPUs or strong acceleration if you rely on advanced on-device features.

– Keep apps and device firmware updated to receive model improvements and security patches.

– Be mindful of battery tradeoffs with aggressive always-on features like continuous sensor monitoring.

The hybrid future

Edge and cloud will coexist. Lightweight local models will handle latency-sensitive and privacy-critical tasks, while cloud systems will continue to power large-scale training, cross-user personalization, and compute-heavy analytics. That hybrid approach delivers the best of both worlds: immediate, private interactions plus the long-term benefits of centralized learning.
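One way to express that split in code is a routing function. This is a sketch under assumed inputs: the `Request` and `DeviceState` fields, including a per-request memory estimate, are hypothetical, and a real app would weigh more signals such as battery level, thermal state, and model accuracy.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    LOCAL = "local"            # full on-device model
    LOCAL_LITE = "local_lite"  # smaller on-device fallback with reduced quality
    CLOUD = "cloud"            # offload to a remote service


@dataclass
class Request:
    privacy_sensitive: bool
    est_mem_mb: float  # hypothetical estimate of the full local model's working memory


@dataclass
class DeviceState:
    online: bool
    free_mem_mb: float


def route(req: Request, dev: DeviceState) -> Target:
    """Prefer local inference; offload only when the task is too heavy and safe to send."""
    if req.est_mem_mb <= dev.free_mem_mb:
        return Target.LOCAL
    if dev.online and not req.privacy_sensitive:
        return Target.CLOUD
    # Offline, or the data must not leave the device: degrade gracefully.
    return Target.LOCAL_LITE


if __name__ == "__main__":
    req = Request(privacy_sensitive=True, est_mem_mb=900)
    print(route(req, DeviceState(online=True, free_mem_mb=400)))
```

Checking privacy before offloading means sensitive work falls back to a smaller local model instead of leaving the device, which matches the graceful-degradation practice described earlier.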

As the ecosystem matures, expect more apps to prioritize on-device intelligence. That means better responsiveness, tighter privacy controls, and features that continue to work when the network doesn’t—making technology feel more personal and dependable.